The Next AI Era: Technology, Regulation, and Geopolitics

September 19, 2025

the policy gap

The AI Renaissance Is Heterogeneous — And Policy Must Catch Up

On a recent video call conducted almost entirely in a language I don’t speak, I gave myself a challenge: Could I build a real-time translator using AI before the call ended?

With only a partial understanding of the components I needed and a handful of tools, I improvised a solution by combining open-source software, models, and NVIDIA’s CUDA libraries. After quickly iterating through several failed attempts, I had a working hack: by the last few minutes of the call, real-time audio translation was running. It was messy, but it worked, and it underscored how quickly artificial intelligence is advancing and how rapidly improvised tools can move from experiment to practical deployment.

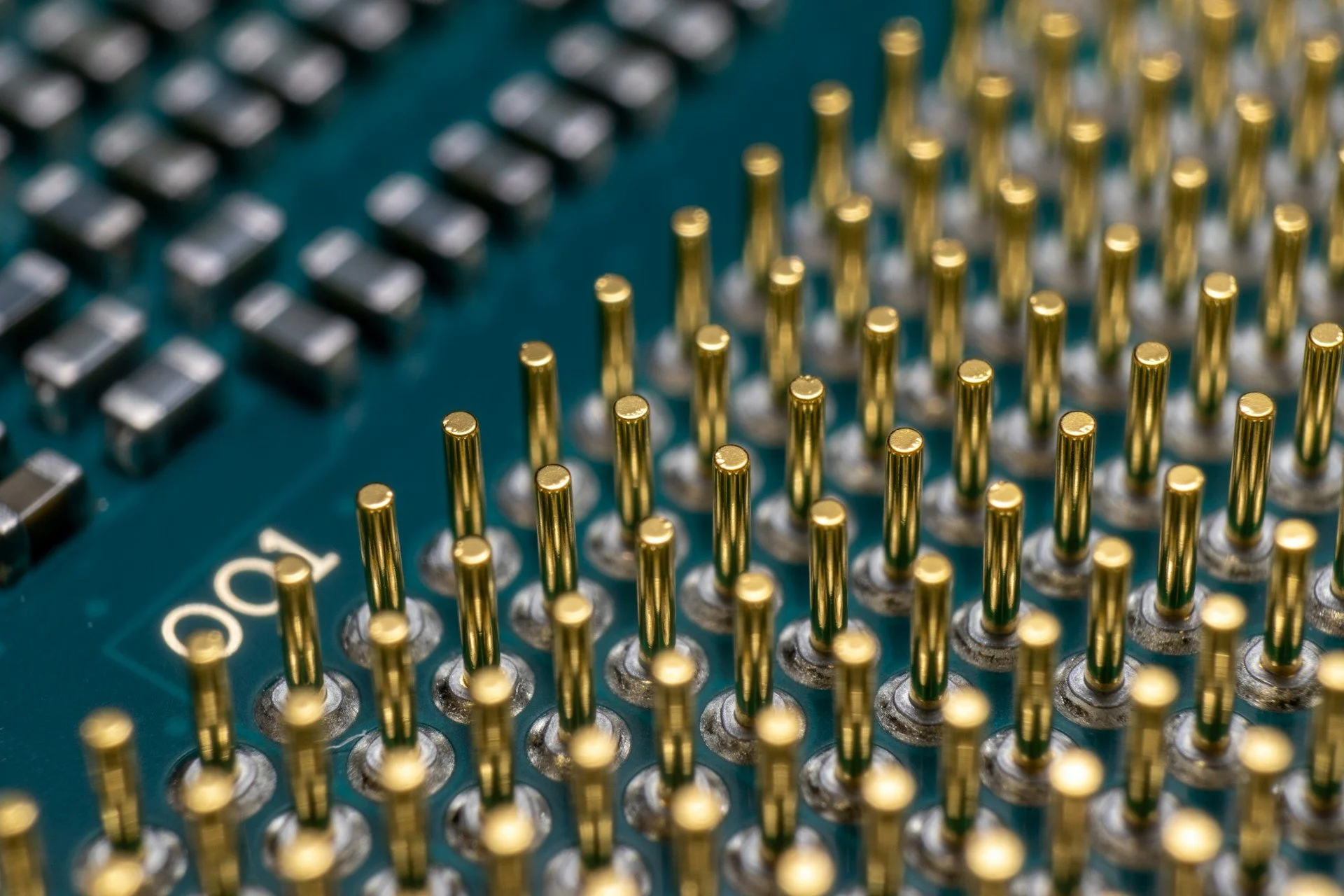

A macro of a silicon wafer. (Unsplash, Laura Ockel)

That pace of innovation captures the broader reality in 2025. Artificial intelligence has entered a defining new era, marked by explosive growth in hardware capabilities, the rise of open-source ecosystems, and the deployment of new model architectures at scale.

AI is no longer confined to research labs; it is reshaping industry, commerce, and policy at unprecedented speed. Beneath the headlines, however, lie complex forces: an intensifying hardware race, breakthroughs in analog compute, democratization through open-source models, and the accelerating integration of AI into robotics and multimodal systems. For leaders, the question is no longer whether AI will matter, but how to navigate its technological, regulatory, legal, and societal crosscurrents.

Hardware Competition and the Analog Frontier

NVIDIA remains the industry leader, with its H100 and H200 GPUs powering the majority of large model training and inference worldwide. Yet competition is intensifying. AMD’s MI300 series is reaching parity or better on inference workloads, with superior efficiency. Google’s TPU v5p and Apple’s AI-centric A19 chip highlight advances in both hyperscale and edge computing.

At the same time, analog computing is gaining traction after decades of promise. Early deployments in sensor fusion and low-power inference demonstrate that analog chips could deliver order-of-magnitude improvements in energy efficiency and size.

If commercialized at scale, this shift would disrupt not just data center economics but also the regulatory frameworks that govern semiconductor exports, since most existing controls are written around advanced digital chips.

Analog compute falls outside many of today’s definitions of ‘advanced,’ creating gaps in export regimes and national security controls while reshaping supply chain dependencies.

The Rise of Open-Source LLMs

Perhaps the most consequential shift has been the surge in open-source large language models (LLMs). Enterprises across the U.S., Europe, and Asia are adopting open models such as Meta’s Llama 3, China’s DeepSeek, and NVIDIA’s Nemotron. These models now match or surpass many proprietary systems, while dramatically reducing inference costs.

The implications extend well beyond technology.

Open-source adoption is accelerating innovation, but also creating new governance challenges. Security, standards-setting, and regulatory oversight lag behind the speed of deployment. Governments are now grappling with how to ensure accountability in a world where anyone can fine-tune and deploy cutting-edge AI.

Multimodal AI and Robotics

Open-source innovation is also transforming multimodal AI (systems that can understand and generate content across text, images, audio, and video).

New community-driven models now generate high-quality video, speech, and soundscapes that rival, and in some cases outperform, closed commercial offerings. These tools are redefining media, communications, and creative industries, while raising urgent policy questions around intellectual property and misinformation.

Meanwhile, the robotics sector is undergoing rapid acceleration. Collaborative robots powered by deep learning are moving from factory pilots to mainstream commercial adoption.

Advances in computer vision and real-time perception are enabling robots to adjust dynamically in manufacturing, logistics, and healthcare environments.

The result is not just greater productivity, but a reshaping of supply chains, labor markets, and trade patterns, all with direct policy implications.

Heterogeneity as the Defining Trend

The defining feature of today’s AI landscape is heterogeneity.

New model designs, from Mixture-of-Experts architectures to low-precision techniques, prioritize efficiency and scalability. Hardware and software are increasingly co-designed, with closed systems integrating open-source components in ways that blur traditional boundaries. NVIDIA’s open-source release of CV-CUDA for high-throughput vision tasks is just one example of how proprietary and open ecosystems are merging.
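To make the efficiency point concrete, here is a back-of-the-envelope sketch in Python. It is illustrative arithmetic only: the model sizes, expert counts, and function names are hypothetical assumptions chosen for round numbers, not figures from any real system. It shows why storing weights in fewer bits shrinks memory, and why a Mixture-of-Experts model uses only a fraction of its parameters for each token.

```python
# Illustrative arithmetic only: every number below is a hypothetical assumption.

def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed to store the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def moe_active_params(shared_params: float, params_per_expert: float,
                      experts_per_token: int) -> float:
    """Parameters used per token when only a few experts are routed to."""
    return shared_params + params_per_expert * experts_per_token


# Low precision: a hypothetical dense model with 70 billion parameters.
dense_params = 70e9
fp16_gb = weight_memory_gb(dense_params, 16)  # 16-bit weights
int4_gb = weight_memory_gb(dense_params, 4)   # 4-bit weights

# Mixture-of-Experts: hypothetical 10B shared parameters plus 8 experts
# of 5B each, with 2 experts selected per token.
total_params = 10e9 + 8 * 5e9
active_params = moe_active_params(10e9, 5e9, experts_per_token=2)

print(f"16-bit weights: {fp16_gb:.0f} GB, 4-bit weights: {int4_gb:.0f} GB")
print(f"MoE total: {total_params / 1e9:.0f}B, active per token: {active_params / 1e9:.0f}B")
```

Under these assumptions, the same weights need a quarter of the memory at 4 bits, and the MoE model touches 20 billion of its 50 billion parameters per token. Real systems add overheads this sketch ignores, such as activations, caches, and any accuracy lost to quantization.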

This heterogeneity is not a technical curiosity. It is a structural reality that will determine who leads and who lags in AI.

Policy and Trade Implications

For companies, the challenge is no longer limited to choosing the right chip or model. The decisive factor will be aligning technological choices with trade and regulatory realities.

Export controls on semiconductors, divergent AI safety frameworks, and cross-border data rules both inside and outside of the U.S. are already shaping investment flows and competitive positioning.

The future will belong to those who can integrate technical innovation while managing geopolitical, regulatory, trade, and national security pressures, orchestrating across hardware, software, and regulatory environments. In this sense, AI is not only a technological revolution but also a test of strategic alignment. Those who can manage both sides of the equation will define the next chapter of the AI era.

Published by Basilinna Institute. All rights reserved.